csKT: Addressing Cold-start Problem in Knowledge Tracing via Kernel Bias and Cone Attention

We added csKT into our pyKT package.

The link is here and the API is here.

The original paper is Bai Y, Li X, Liu Z, et al. “csKT: Addressing Cold-start Problem in Knowledge Tracing via Kernel Bias and Cone Attention.” Proceedings of the Expert Systems with Applications. 2025.

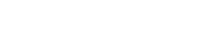

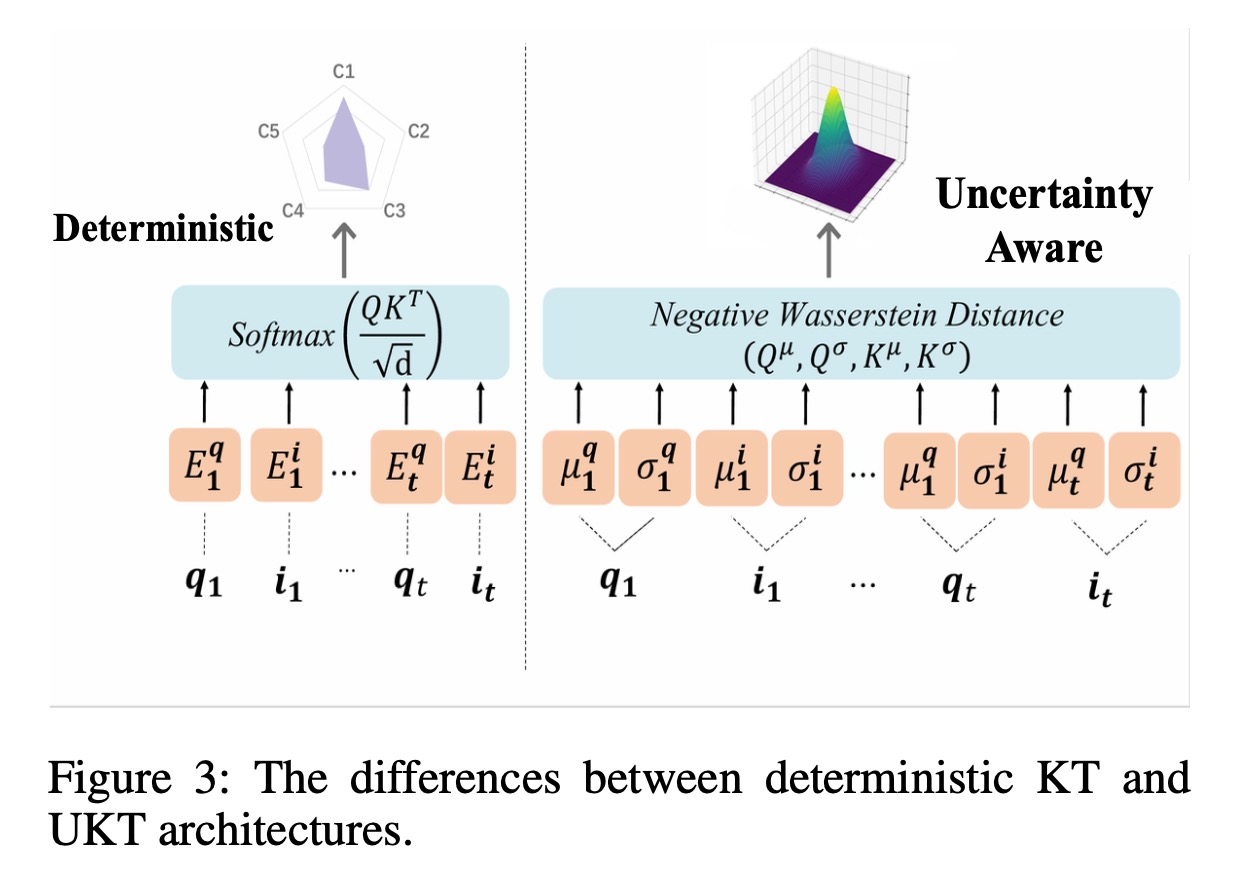

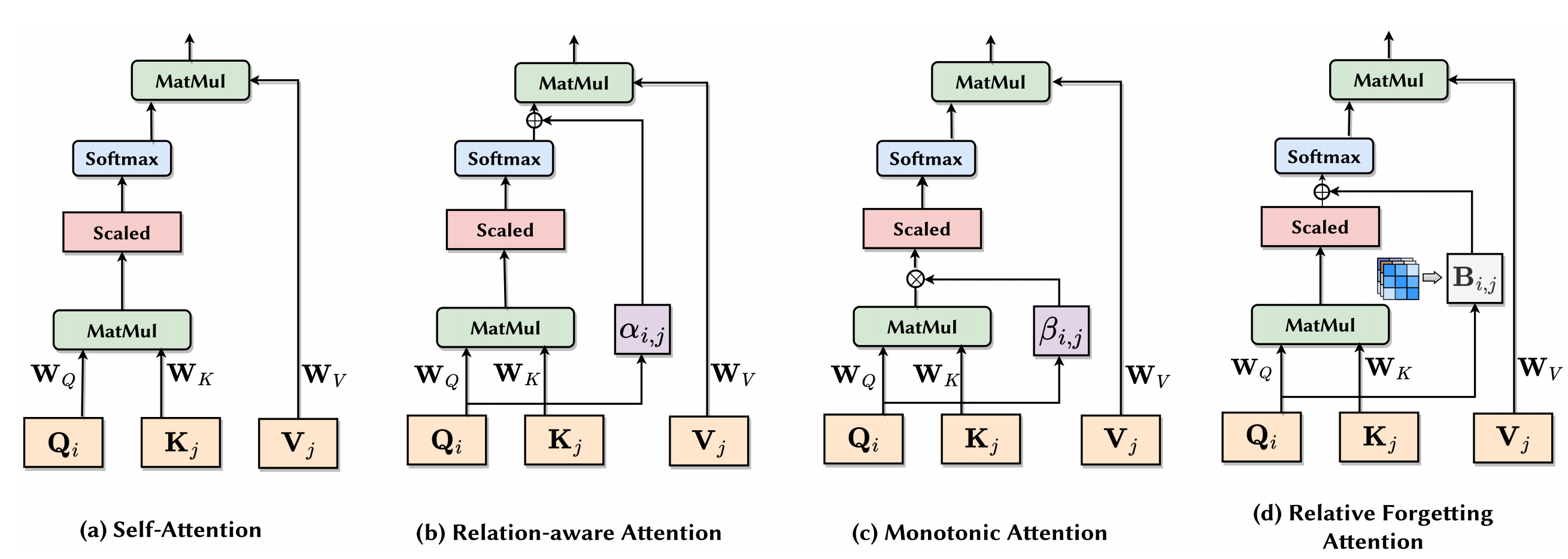

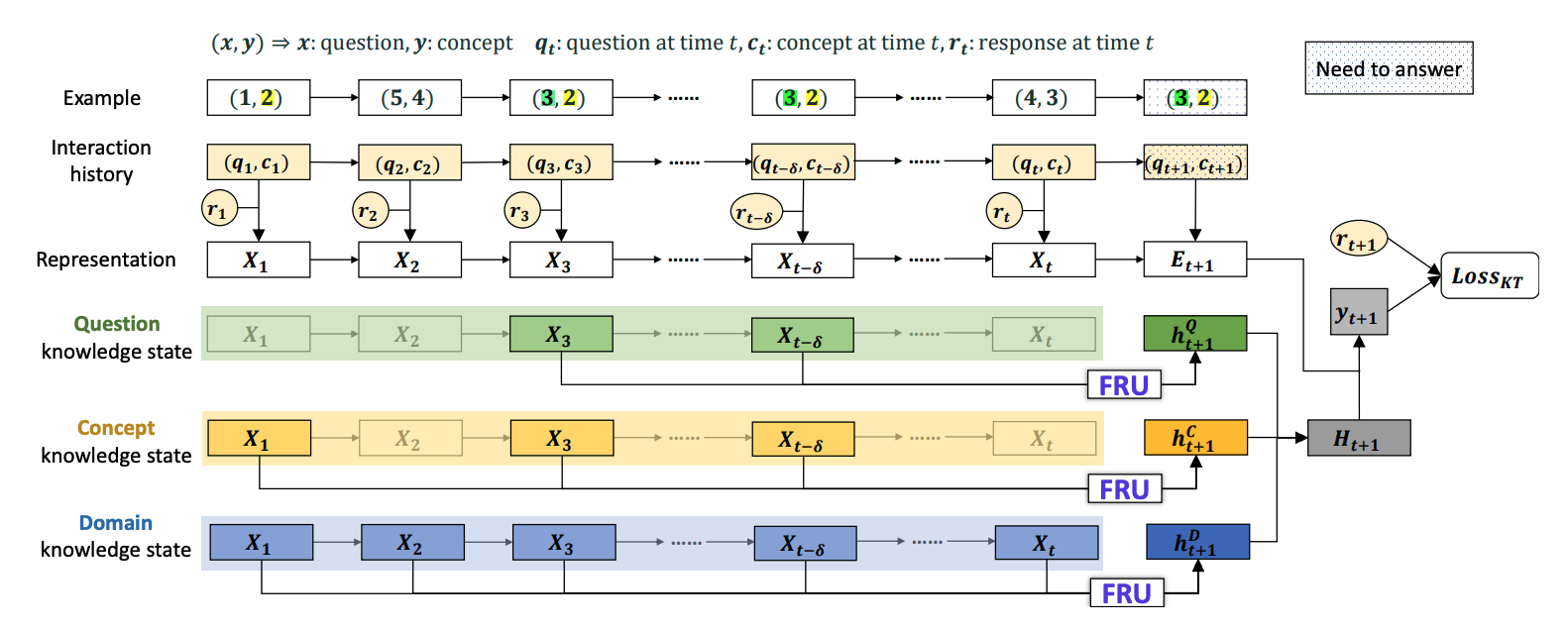

Abstract: Knowledge tracing (KT) is the task of predicting students’ future performance based on their past interactions in online learning systems. When new students enter the system with short interaction sequences, the cold-start problem commonly arises in KT. Although existing deep-learning-based KT models exhibit impressive performance, it remains challenging for these models to be trained on short student interaction sequences and maintain stable prediction accuracy as the number of student interactions increases. In this paper, we propose cold-start KT (csKT) to address this problem. Specifically, csKT employs kernel bias to guide learning from short sequences and ensure accurate predictions for longer sequences, and it also introduces cone attention to better capture complex hierarchical relationships between knowledge components in cold-start scenarios. We evaluate csKT on four public real-world educational datasets, where it demonstrates superior performance over a broad range of deep-learning-based KT models using common evaluation metrics in cold-start scenarios. Additionally, we conduct ablation studies and produce visualizations to verify the effectiveness of our csKT model.
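To make the idea of a bias on attention more concrete, here is a minimal sketch of causal attention weights with a distance-based penalty added to the raw scores. The abstract does not give the form of csKT's kernel bias, so the logarithmic penalty, the function names `log_kernel_bias` and `causal_biased_attention`, and the parameters `r1` and `r2` are all assumptions made for illustration. This is not the pyKT implementation, and it does not cover cone attention.

```python
import math


def log_kernel_bias(i, j, r1=1.0, r2=1.0):
    """Hypothetical distance penalty: 0 when i == j, more negative as |i - j| grows."""
    return -r1 * math.log(1.0 + r2 * abs(i - j))


def causal_biased_attention(scores, r1=1.0, r2=1.0):
    """Row-wise softmax of scores[i][j] + bias(i, j), restricted to past positions j <= i."""
    weights = []
    for i, row in enumerate(scores):
        logits = [row[j] + log_kernel_bias(i, j, r1, r2) for j in range(i + 1)]
        peak = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(x - peak) for x in logits]
        total = sum(exps)
        # Future positions (j > i) receive zero weight.
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights


# Uniform raw scores isolate the effect of the bias on the weights.
T = 4
weights = causal_biased_attention([[0.0] * T for _ in range(T)])
for row in weights:
    print([round(w, 3) for w in row])
```

Because the penalty depends only on the distance between positions and not on absolute position, the same function applies unchanged to sequences longer than those seen in training. Under the assumptions above, that is one plausible reading of how a bias of this kind could help a model trained on short sequences stay stable as interactions accumulate.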