Rethinking and Improving Student Learning and Forgetting Processes for Attention Based Knowledge Tracing Models

We have added LefoKT to our pyKT package.

The link is here and the API is here.

The original paper is: Bai Y, Li X, Liu Z, et al. “Rethinking and Improving Student Learning and Forgetting Processes for Attention Based Knowledge Tracing Models.” Proceedings of the 39th Annual AAAI Conference on Artificial Intelligence. 2025.

Title: Rethinking and Improving Student Learning and Forgetting Processes for Attention Based Knowledge Tracing Models

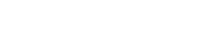

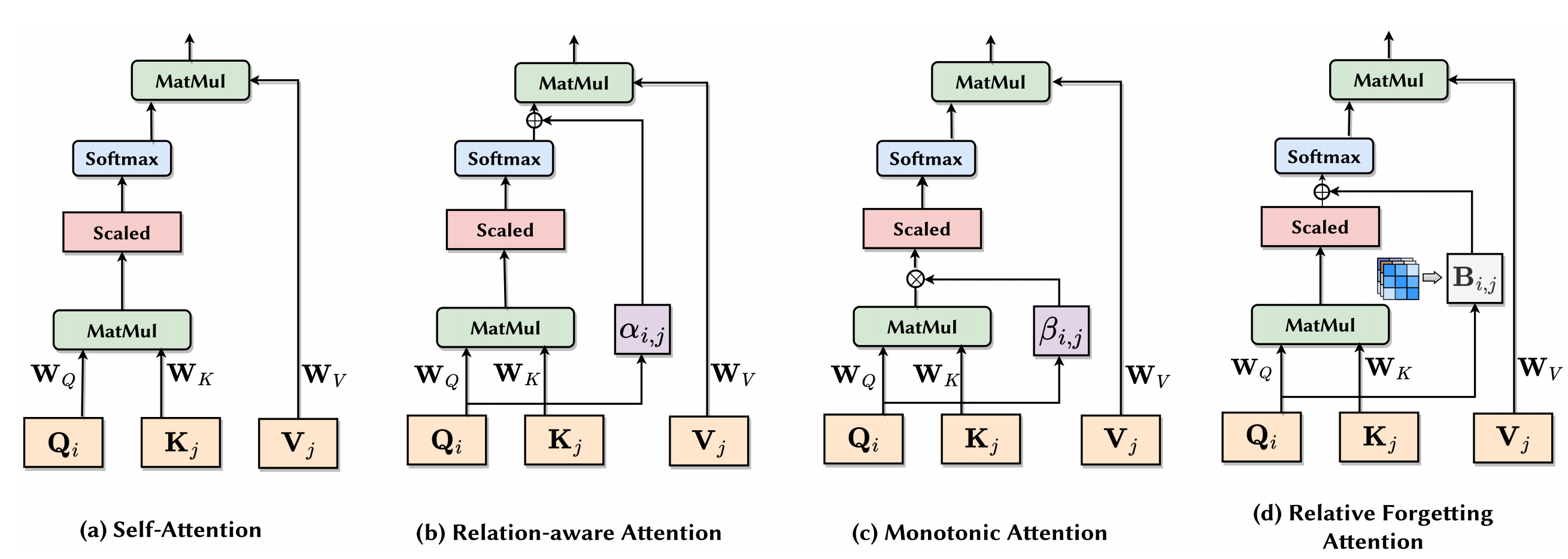

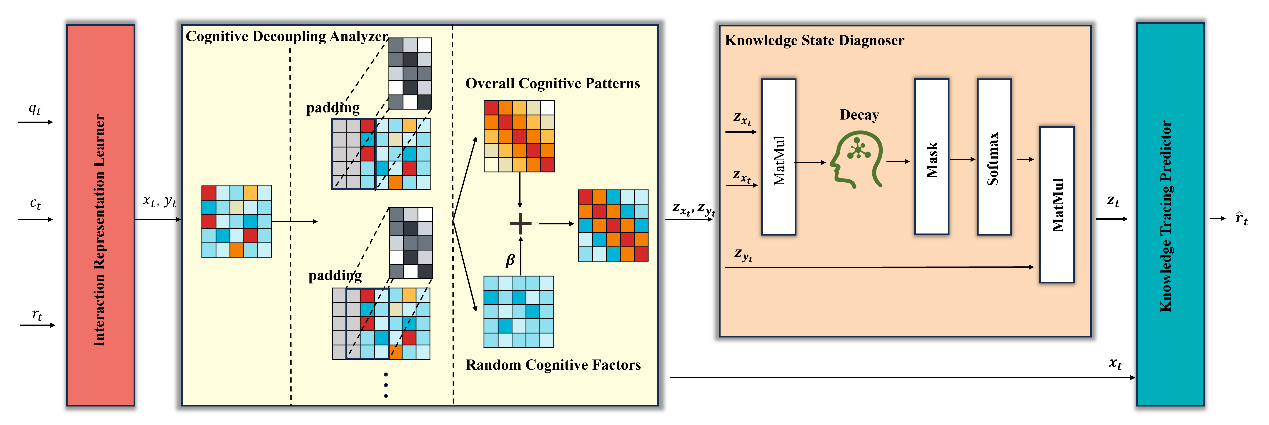

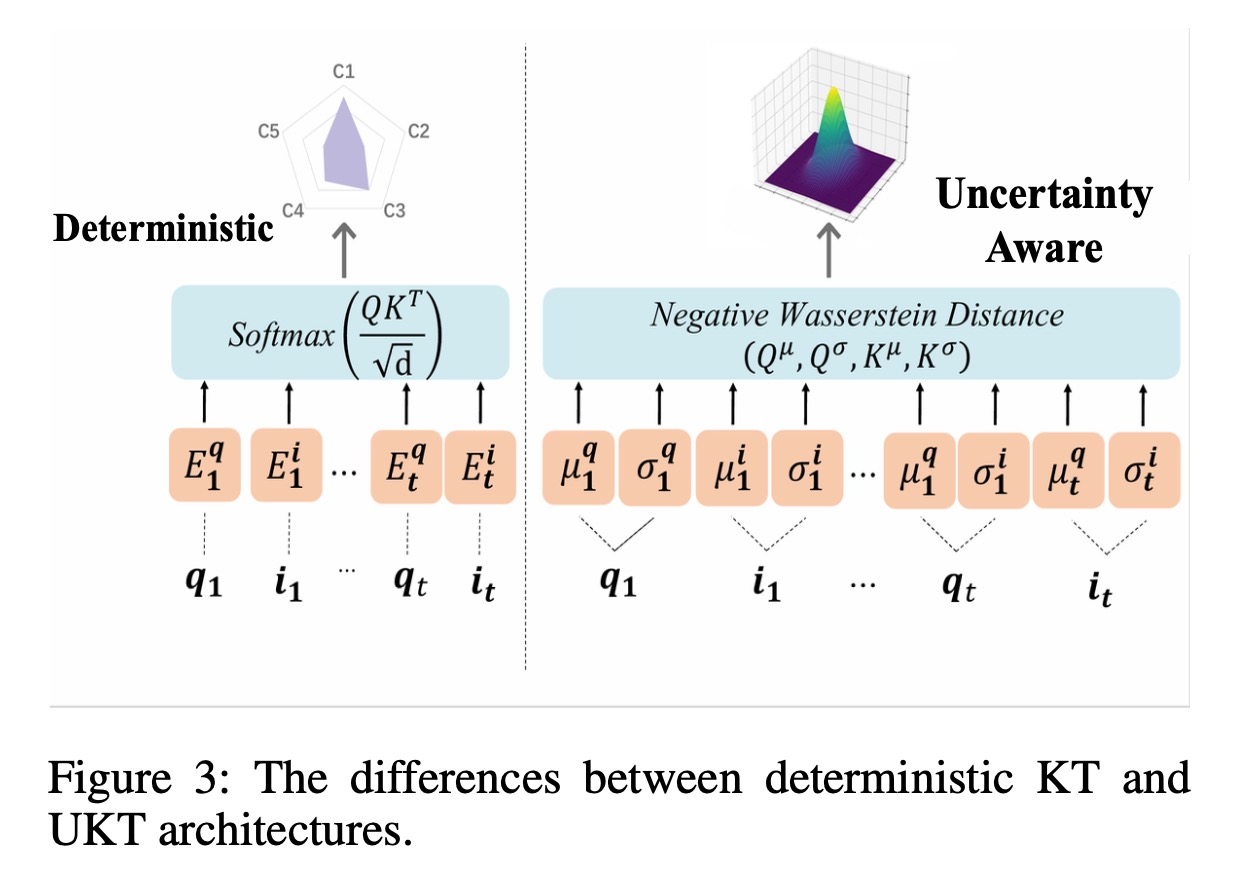

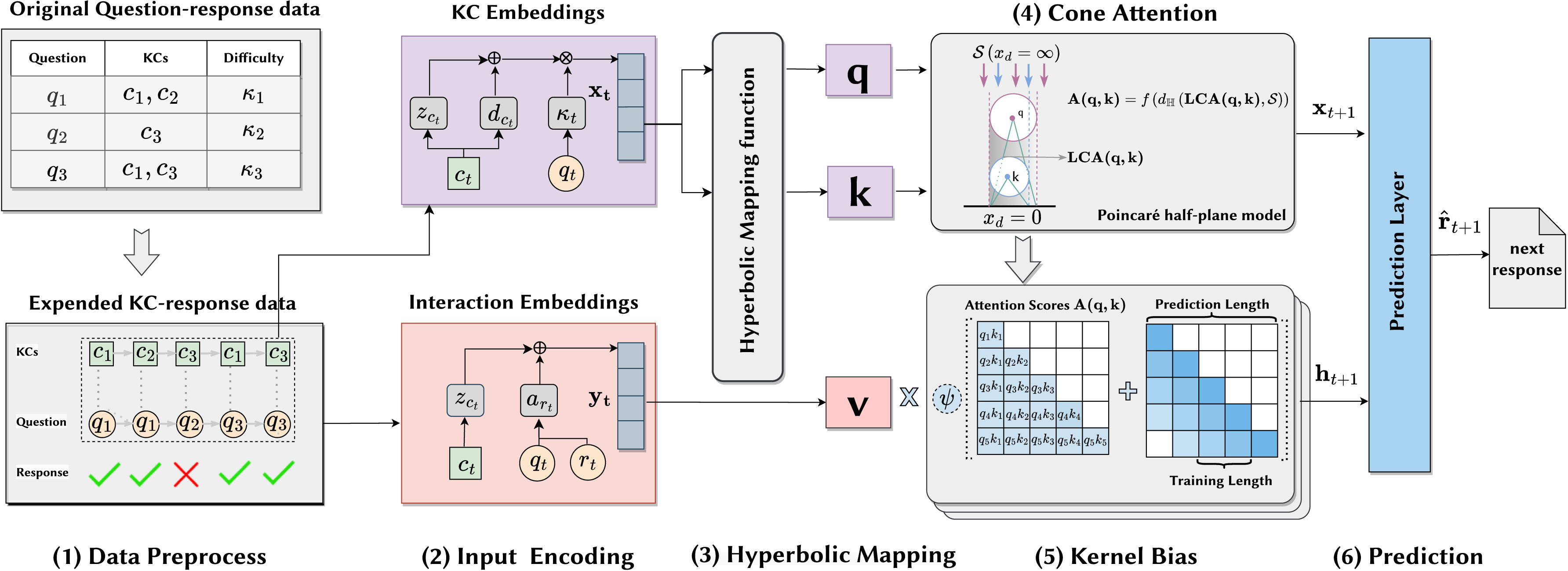

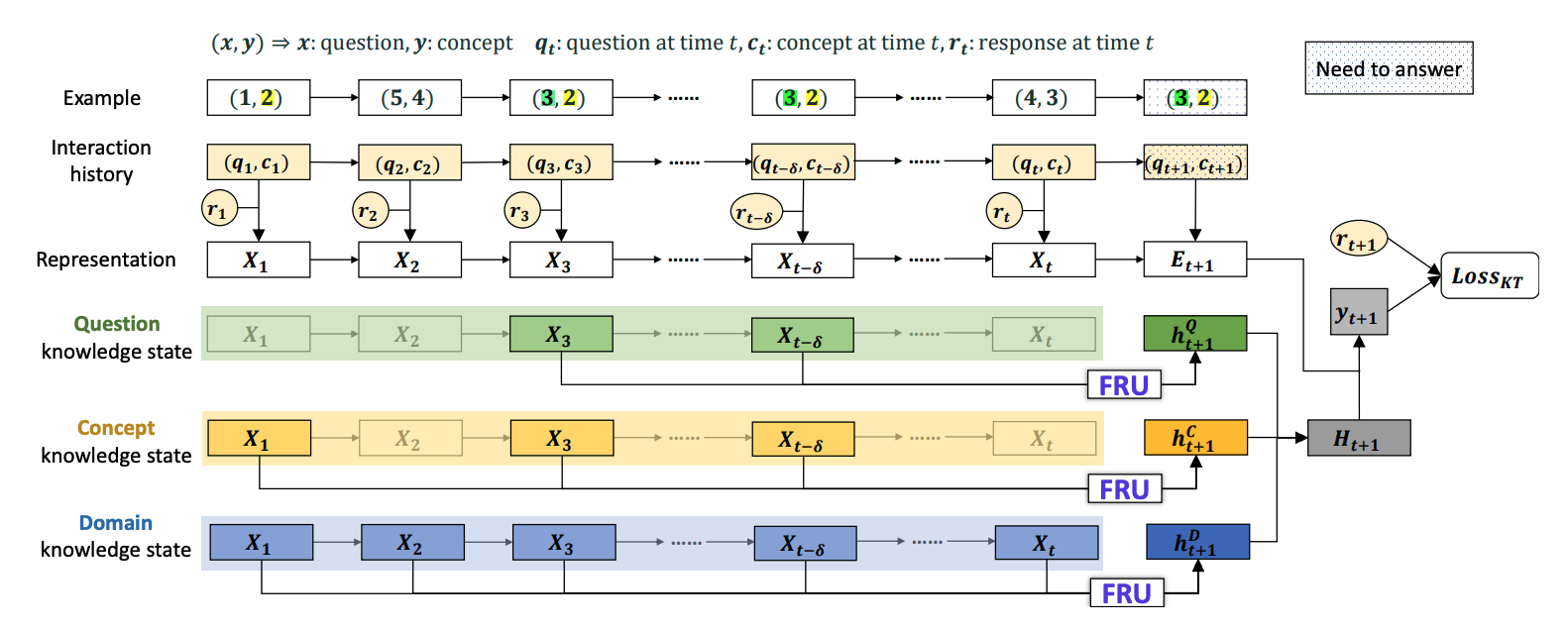

Abstract: Knowledge tracing (KT) models students’ knowledge states and predicts their future performance based on their historical interaction data. However, attention based KT models struggle to accurately capture diverse forgetting behaviors in ever-growing interaction sequences. First, existing models use uniform time decay matrices, conflating forgetting representations with problem relevance. Second, the fixed-length window prediction paradigm fails to model continuous forgetting processes in expanding sequences. To address these challenges, this paper introduces LefoKT, a unified architecture that enhances attention based KT models by incorporating proposed relative forgetting attention. LefoKT improves forgetting modeling through relative forgetting attention to decouple forgetting patterns from problem relevance. It also enhances attention based KT models’ length extrapolation capability for capturing continuous forgetting processes in ever-growing interaction sequences. Extensive experimental results on three datasets validate the effectiveness of LefoKT.
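To make the idea of decoupling forgetting from problem relevance more concrete, here is a minimal sketch in plain Python. It is not the paper's formulation and not the pyKT implementation: it assumes the forgetting term is a linear penalty on the relative distance between interactions, added to the relevance scores before the softmax. The function name `relative_forgetting_attention` and the `slope` parameter are hypothetical, chosen only for illustration.

```python
import math

def relative_forgetting_attention(scores, slope=0.5):
    """Causal attention weights with a distance-based forgetting bias.

    scores: square list of lists; scores[i][j] is the relevance of past
            interaction j to the current interaction i.
    slope:  hypothetical forgetting rate (an assumption of this sketch);
            larger values mean faster decay with distance.
    """
    weights = []
    for i, row in enumerate(scores):
        # Only positions j <= i are visible (causal mask). The bias depends
        # only on the relative distance i - j, not on the absolute position.
        biased = [row[j] - slope * (i - j) for j in range(i + 1)]
        peak = max(biased)  # subtract the max for numerical stability
        exps = [math.exp(b - peak) for b in biased]
        total = sum(exps)
        # Future positions receive zero weight.
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights

# With identical relevance everywhere, only the forgetting bias differs,
# so more recent interactions receive more weight.
uniform = [[0.0] * 4 for _ in range(4)]
w = relative_forgetting_attention(uniform, slope=0.5)
print([round(x, 3) for x in w[3]])
```

Because the bias in this sketch is a function of `i - j` alone, the relevance scores and the forgetting term stay separate, and the same rule applies unchanged when the sequence grows longer. That is the intuition behind length extrapolation, under the assumptions stated above.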