extraKT: Extending Context Window of Attention Based Knowledge Tracing Models via Length Extrapolation

We have added extraKT to our pyKT package.

The link is here and the API is here.

The original paper is: Li X, Bai Y, Guo T, et al. “Extending Context Window of Attention Based Knowledge Tracing Models via Length Extrapolation.” Proceedings of the 26th European Conference on Artificial Intelligence. 2024.

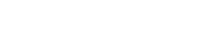

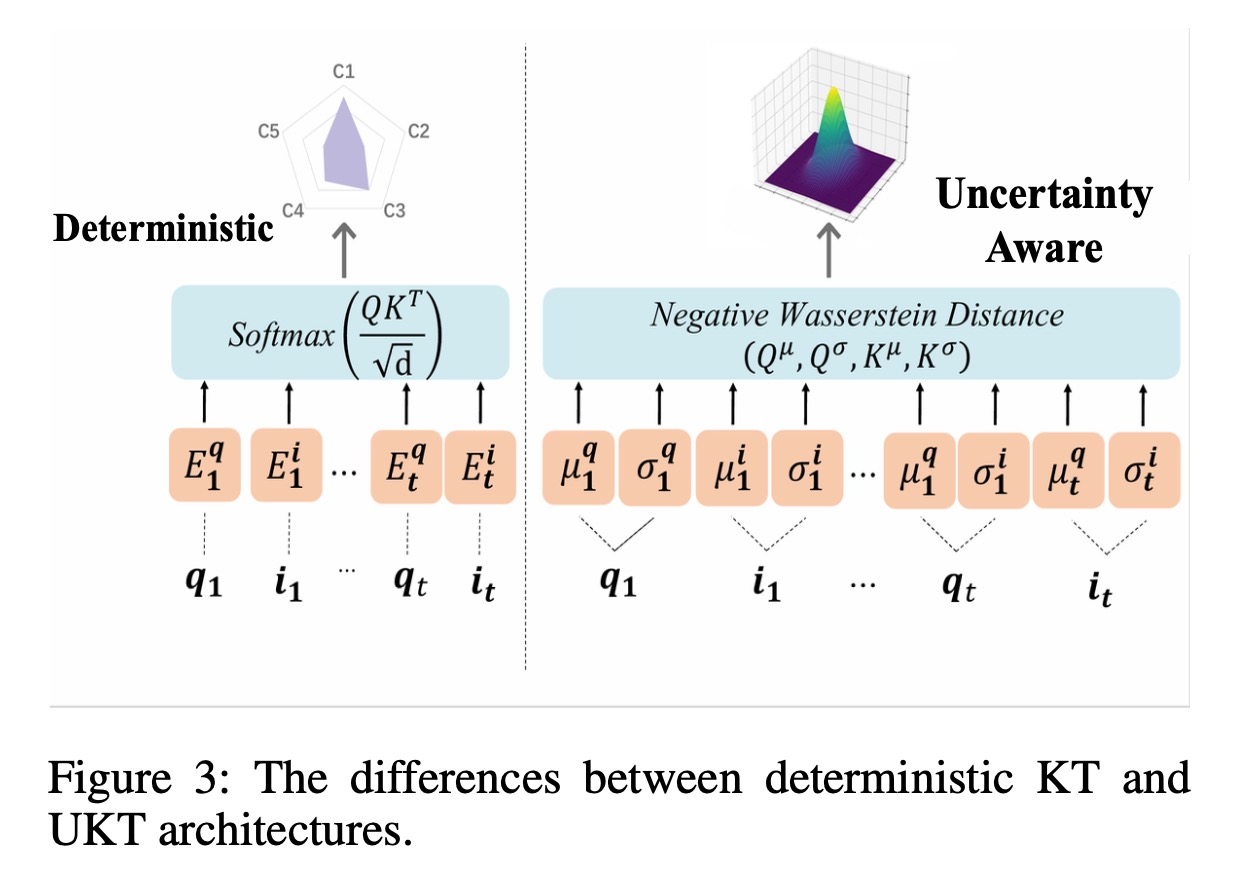

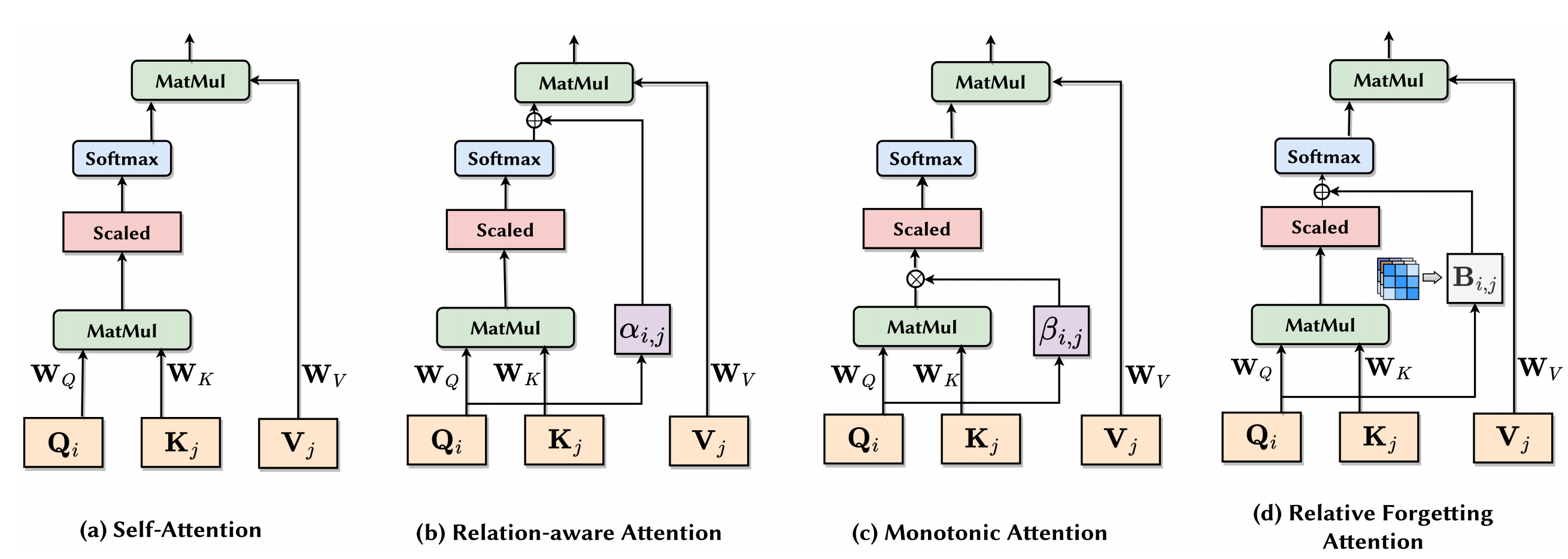

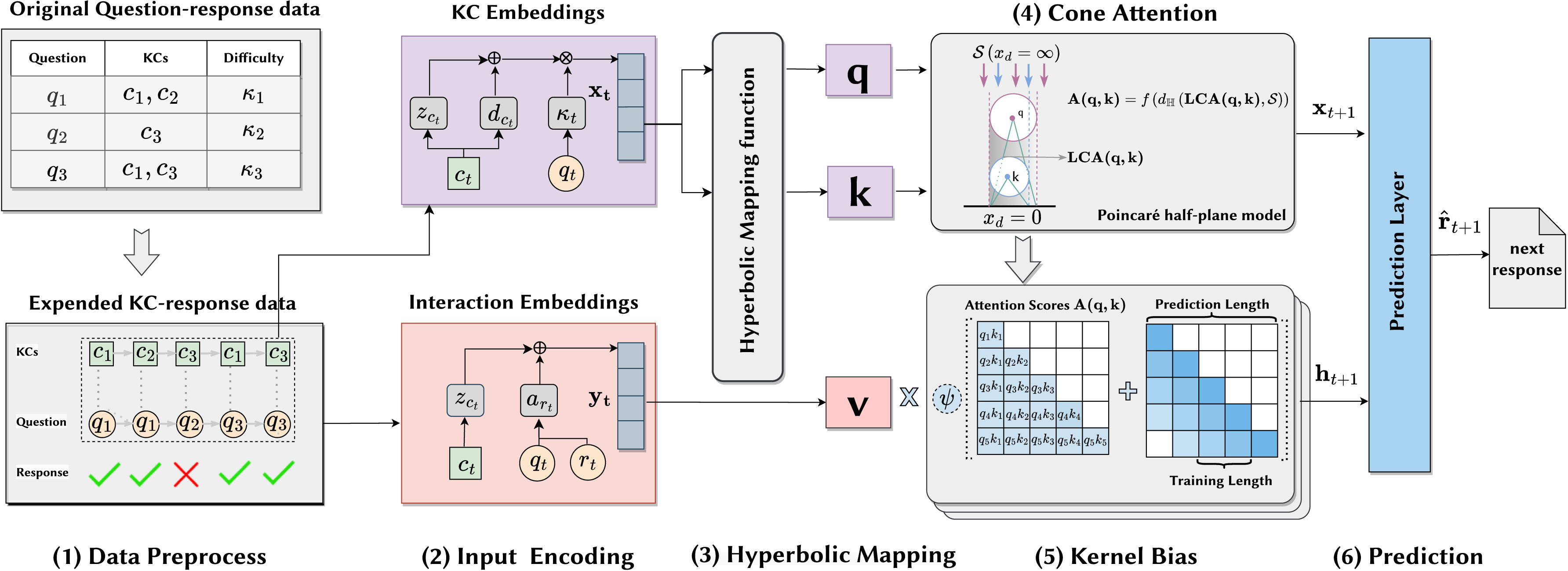

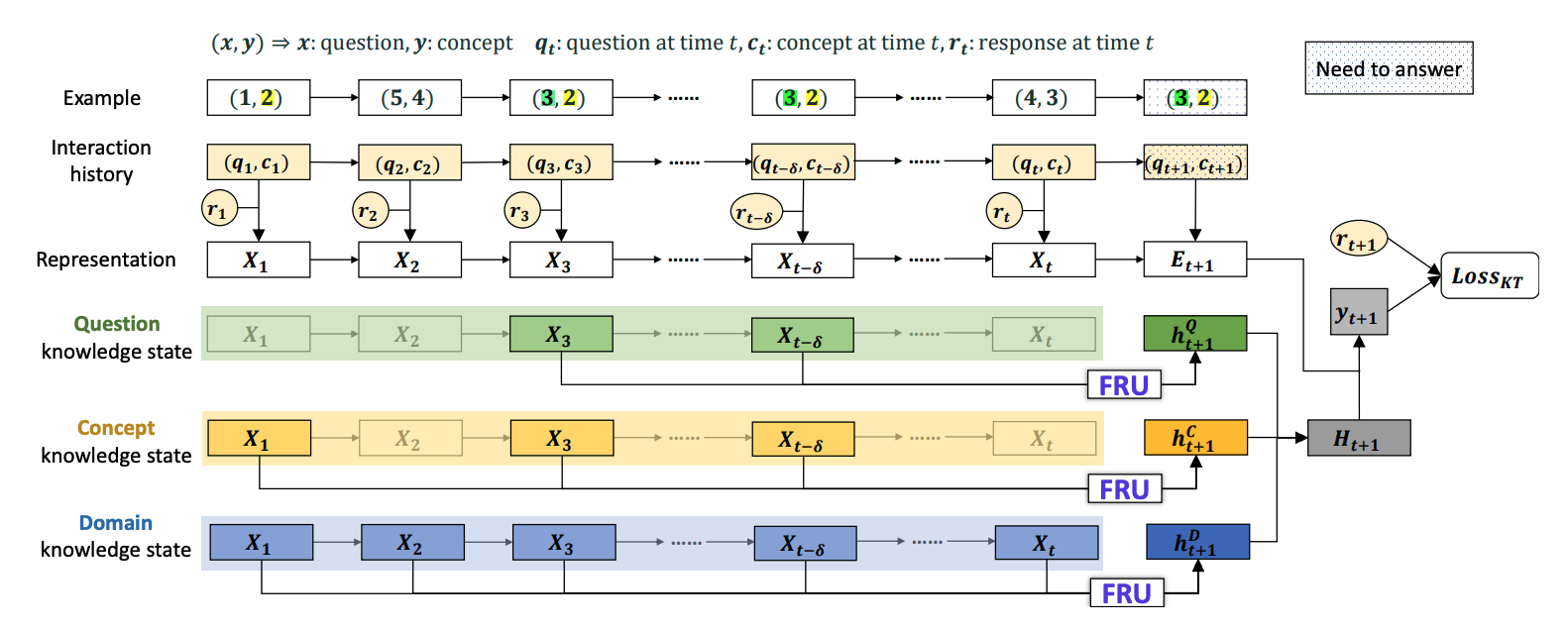

Abstract: Knowledge tracing (KT) is a prediction task that aims to predict students’ future performance based on their past learning data. The rapid progress in attention mechanisms has led to the emergence of various high-performing attention based KT models. However, in online or personalized education settings, students’ varying learning paths result in student interaction sequences of different lengths, which poses a significant challenge for attention based KT models because their context window sizes are fixed during both the training and prediction stages. We refer to this as the length extrapolation problem of KT models. In this paper, we propose extraKT to facilitate better extrapolation: the model learns from student interactions with a short context window and continues to perform well across various longer context window sizes at the prediction stage. Specifically, we negatively bias attention scores with linearly decreasing penalties that are proportional to query-key distance, which efficiently represents the short-term forgetting characteristics of student knowledge states. We conduct comprehensive and rigorous experiments on three real-world educational datasets. The results show that our extraKT model exhibits robust length extrapolation capability and outperforms state-of-the-art baseline models in terms of AUC and accuracy.
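
The distance-proportional penalty described in the abstract can be illustrated with a minimal, dependency-free sketch. This is not the pyKT implementation: the function names (`linear_bias`, `biased_attention_weights`), the single scalar `slope`, and the causal masking are assumptions made only for illustration, and the actual model may choose slopes and masks differently.

```python
import math


def linear_bias(seq_len, slope):
    """Penalty matrix: -slope * (i - j) for keys j <= query i.

    Keys after the query (j > i) get -inf, i.e. a causal mask
    (an assumption of this sketch). `slope` is a hypothetical scalar.
    """
    return [
        [-slope * (i - j) if j <= i else -math.inf for j in range(seq_len)]
        for i in range(seq_len)
    ]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def biased_attention_weights(scores, slope):
    """Add the linear distance penalty to raw scores, then normalize each row."""
    n = len(scores)
    bias = linear_bias(n, slope)
    return [
        softmax([s + b for s, b in zip(scores[i], bias[i])])
        for i in range(n)
    ]


# The bias depends only on query-key distance, so the same function
# applies unchanged to a short window and to a longer one.
short = biased_attention_weights([[0.0] * 4 for _ in range(4)], slope=0.5)
longer = biased_attention_weights([[0.0] * 8 for _ in range(8)], slope=0.5)
```

Because the penalty grows with distance, older interactions receive smaller attention weights than recent ones when the raw scores are equal, which is one way to read the "short-term forgetting" intuition. Since the penalty is a function of relative distance rather than absolute position, nothing in the sketch is tied to the window length used during training.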