FoLiBiKT: Forgetting-aware Linear Bias for Attentive Knowledge Tracing

We have added FoLiBiKT to our pyKT package.

The link is here and the API is here.

The original paper is: Im, Yoonjin, et al. “Forgetting-aware Linear Bias for Attentive Knowledge Tracing.” Proceedings of the 32nd ACM International Conference on Information and Knowledge Management. 2023.

Title: FoLiBiKT: Forgetting-aware Linear Bias for Attentive Knowledge Tracing

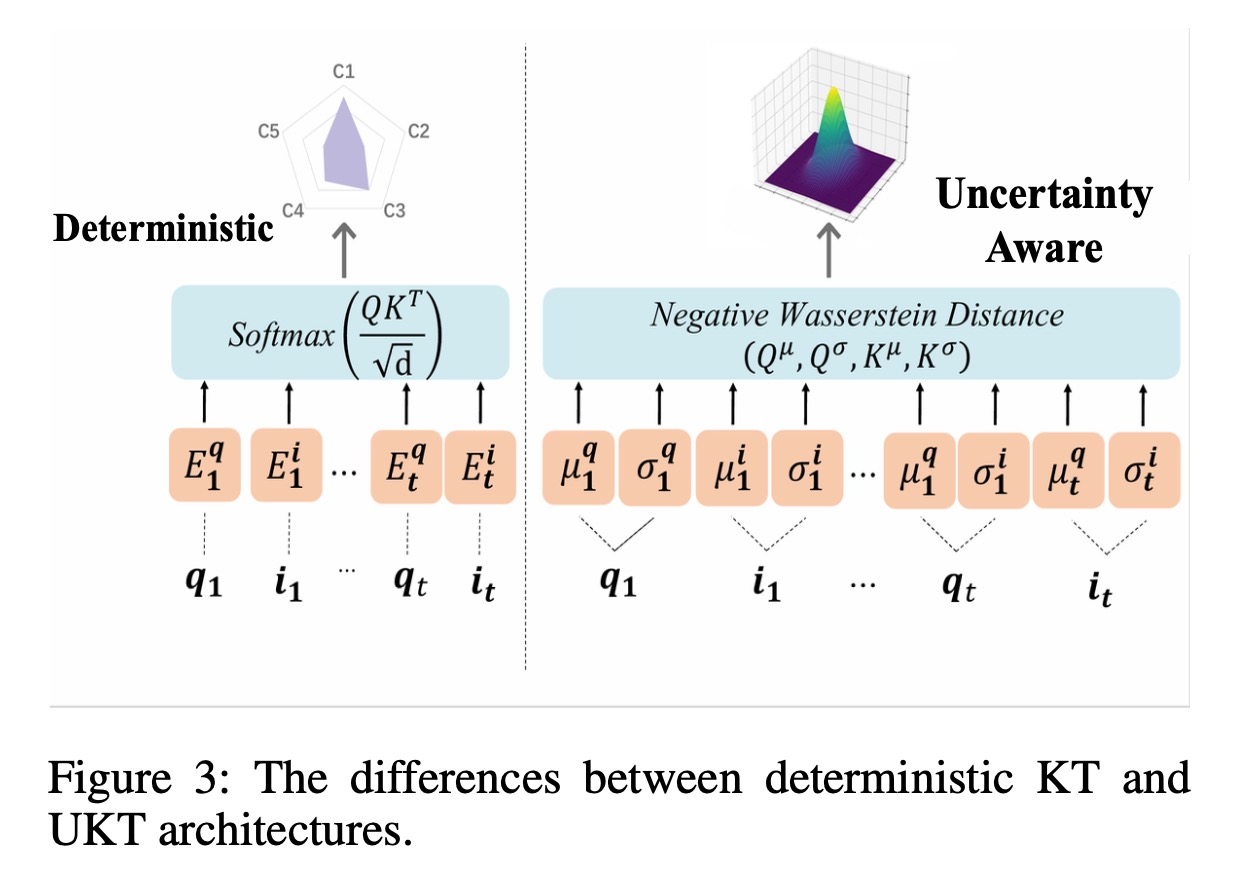

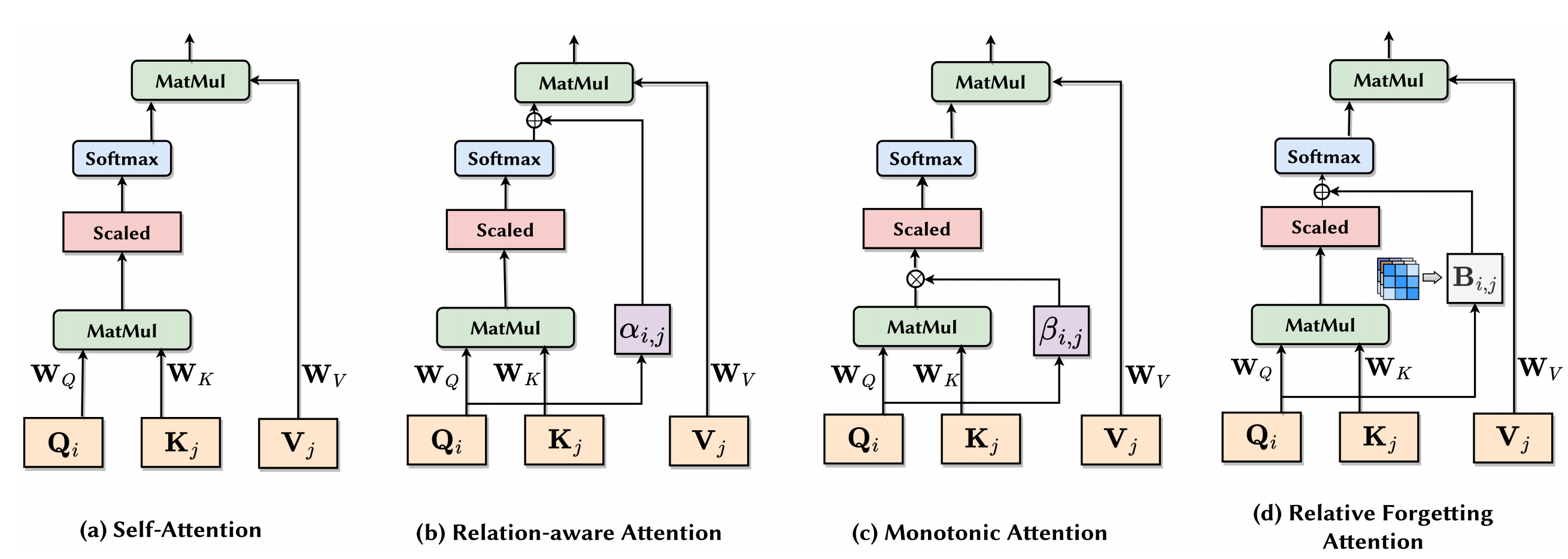

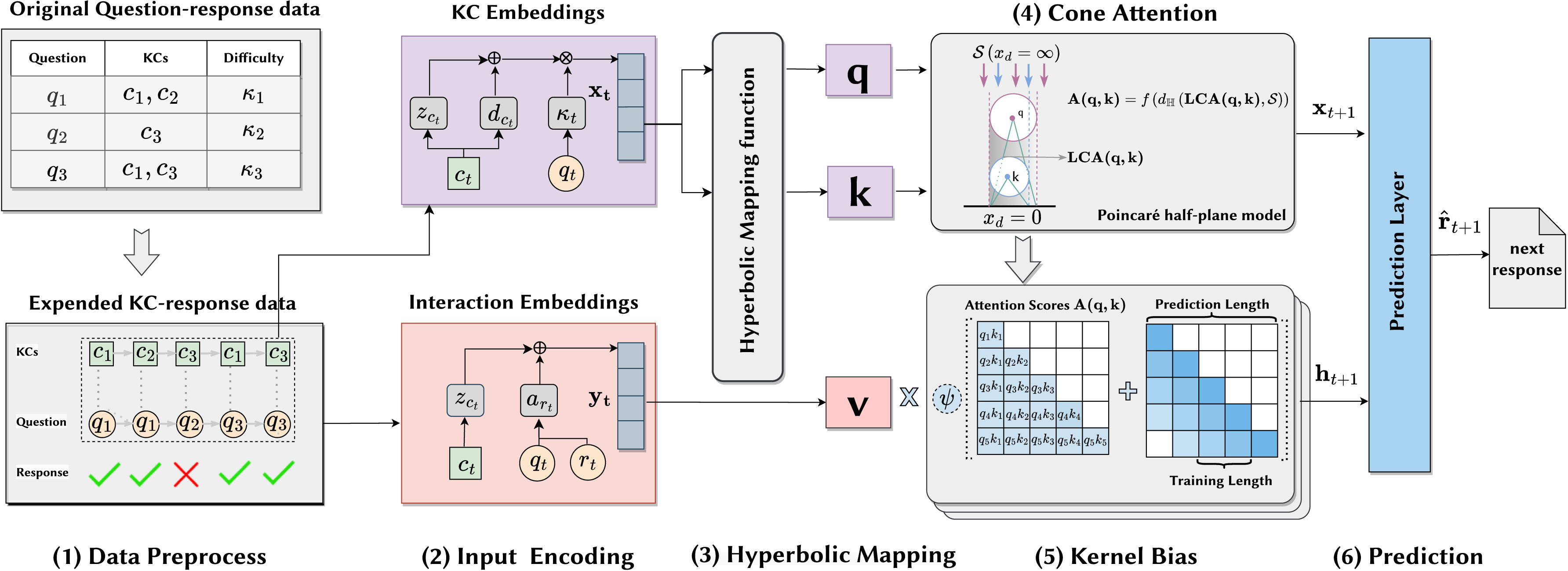

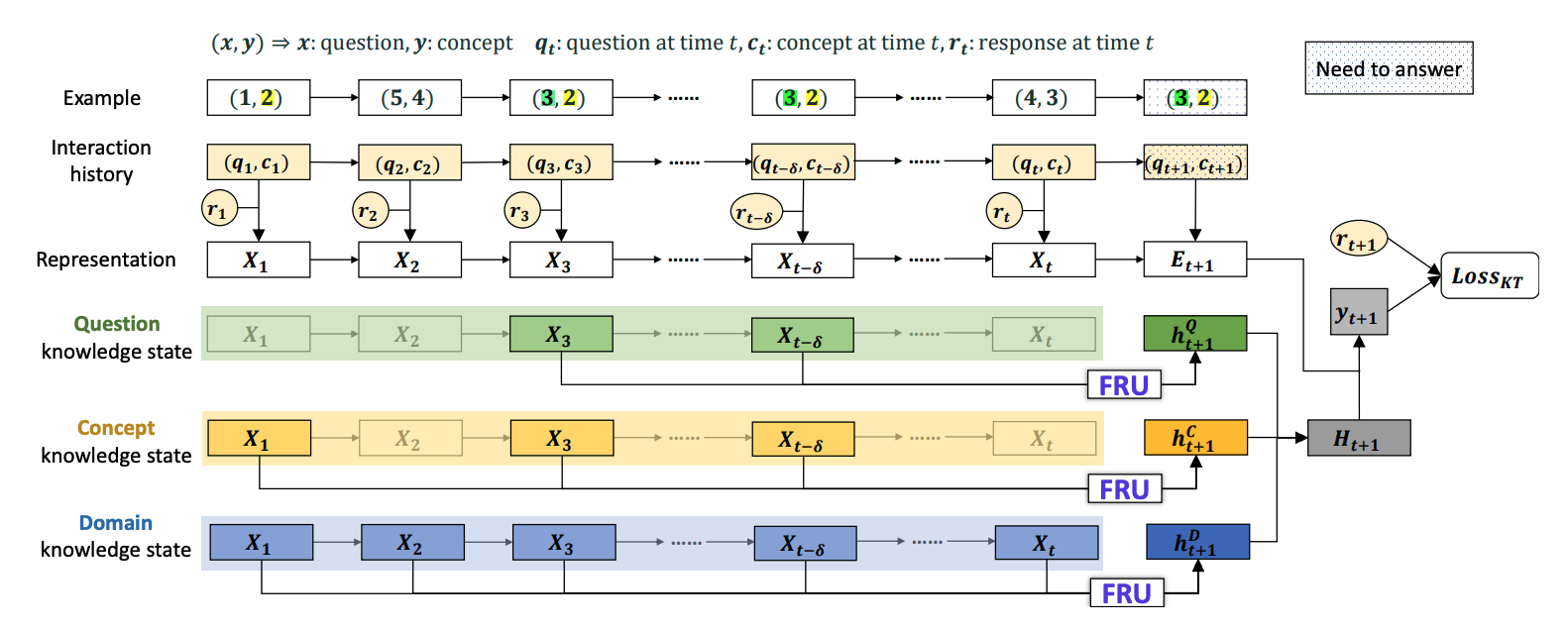

Abstract: Knowledge Tracing (KT) aims to track proficiency based on a question-solving history, allowing us to offer a streamlined curriculum. Recent studies actively utilize attention-based mechanisms to capture the correlation between questions and combine it with the learner’s characteristics for responses. However, our empirical study shows that existing attention-based KT models neglect the learner’s forgetting behavior, especially as the interaction history becomes longer. This problem arises from the bias that overprioritizes the correlation of questions while inadvertently ignoring the impact of forgetting behavior. This paper proposes a simple-yet-effective solution, namely Forgetting-aware Linear Bias (FoLiBi), to reflect forgetting behavior as a linear bias. Despite its simplicity, FoLiBi is readily equipped with existing attentive KT models by effectively decomposing question correlations with forgetting behavior. FoLiBi plugged with several KT models yields a consistent improvement of up to 2.58% in AUC over state-of-the-art KT models on four benchmark datasets.
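To make the idea of "forgetting as a linear bias" concrete, here is a minimal sketch in plain Python. It is not the pyKT implementation and not necessarily the paper's exact formulation. It assumes a causal attention layer in which each score is reduced by a penalty proportional to the distance between the current interaction and the past one. The function name `attention_weights_with_linear_bias` and the `slope` parameter are hypothetical, chosen only for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights_with_linear_bias(queries, keys, slope):
    """Causal attention weights with a distance-proportional penalty.

    Assumption (illustrative only): the score between position i and an
    earlier position j is the scaled dot product minus slope * (i - j),
    so older interactions receive less attention.
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j in range(i + 1):  # causal: attend only to positions <= i
            dot = sum(a * b for a, b in zip(q, keys[j]))
            scores.append(dot / math.sqrt(d) - slope * (i - j))
        weights.append(softmax(scores))
    return weights


# Identical queries and keys, so the question correlation alone is uniform.
q = [[1.0, 0.0]] * 4
k = [[1.0, 0.0]] * 4

plain = attention_weights_with_linear_bias(q, k, slope=0.0)
biased = attention_weights_with_linear_bias(q, k, slope=0.5)

print(plain[3])   # uniform weights over the four past positions
print(biased[3])  # weights grow toward the most recent position
```

With `slope=0.0` the weights depend only on question correlation. With a positive slope the same correlations are kept, but recent interactions are weighted more heavily, which is the separation of question correlation from forgetting behavior that the abstract describes.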