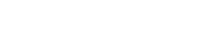

SKVMN: Sequential Key-Value Memory Networks

We have added SKVMN to our pyKT package.

The link is here and the API is here.

The original paper is: Abdelrahman, Ghodai, and Qing Wang. “Knowledge tracing with sequential key-value memory networks.” Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval. 2019.

Title: Knowledge Tracing with Sequential Key-Value Memory Networks

Authors: Ghodai Abdelrahman, Qing Wang

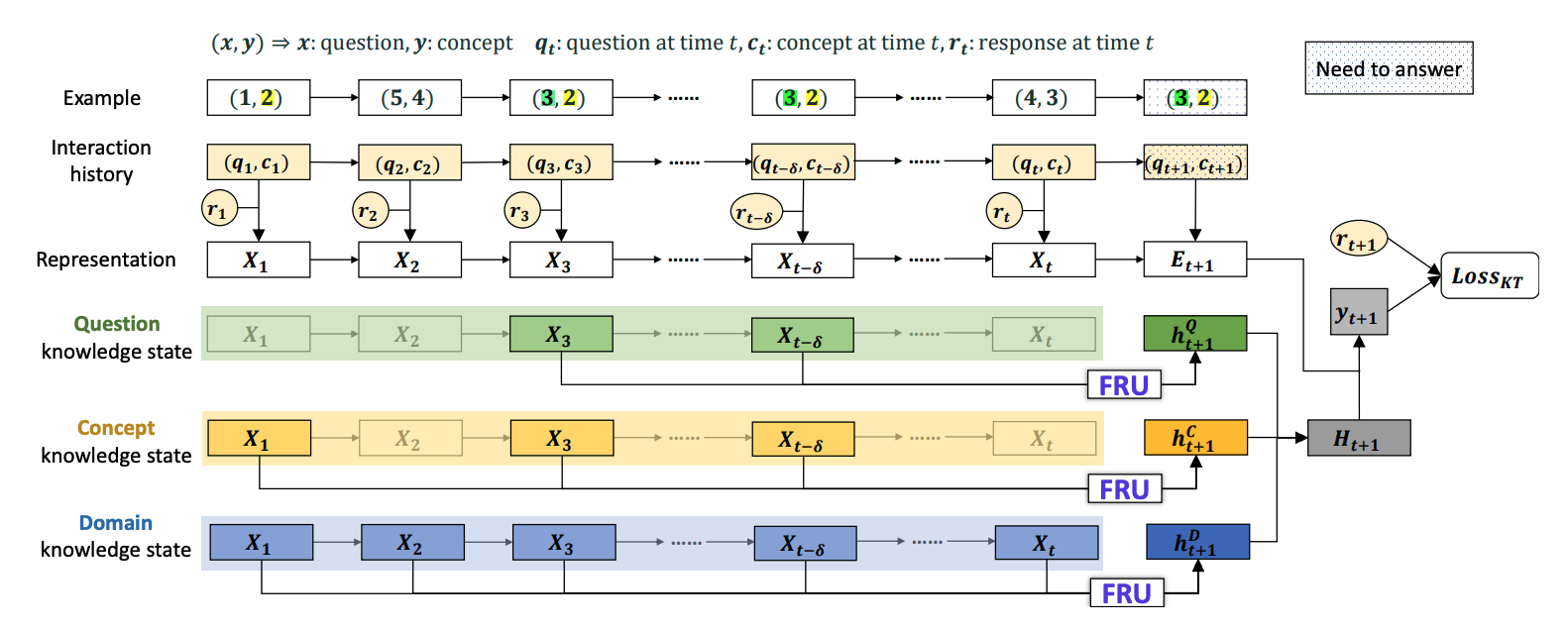

Abstract: Can machines trace human knowledge like humans? Knowledge tracing (KT) is a fundamental task in a wide range of applications in education, such as massive open online courses (MOOCs), intelligent tutoring systems, educational games, and learning management systems. It models dynamics in a student’s knowledge states in relation to different learning concepts through their interactions with learning activities. Recently, several attempts have been made to use deep learning models for tackling the KT problem. Although these deep learning models have shown promising results, they have limitations: either lack the ability to go deeper to trace how specific concepts in a knowledge state are mastered by a student, or fail to capture long-term dependencies in an exercise sequence. In this paper, we address these limitations by proposing a novel deep learning model for knowledge tracing, namely Sequential Key-Value Memory Networks (SKVMN). This model unifies the strengths of recurrent modelling capacity and memory capacity of the existing deep learning KT models for modelling student learning. We have extensively evaluated our proposed model on five benchmark datasets. The experimental results show that (1) SKVMN outperforms the state-of-the-art KT models on all datasets, (2) SKVMN can better discover the correlation between latent concepts and questions, and (3) SKVMN can trace the knowledge state of students dynamically, and leverage sequential dependencies in an exercise sequence for improved prediction accuracy.